Bob Jarvis is an audio-visual artist and programmer from Melbourne who recently caught our eye with his Max for Live-centred projects, most notably VIZZable, a suite of plugins for video performance and manipulation. Ever wanted an easy way to integrate reactive, interesting visuals into a live performance? Then read on – we sat down for a chat with the man behind the software about his projects, performances, and what’s in the works…

Tell us a little about yourself…

My name’s Robert Jarvis and people call me Bob. I make music as Zeal, but these days I mostly work on video and audio-visual projects. I studied jazz in Adelaide, playing bass, and then moved to Melbourne in 2010, where I’m now doing my master’s in computer science. I started getting interested in video by producing stop-motion music videos, which became a gateway into VJing. I also started working with Max for my live Zeal shows when I wanted to be able to play a Guitar Hero controller as an instrument. All of that sort of led me to where I am now, developing software and systems for audio-visual composition and performance. I like building my own tools and get as much of a creative kick out of the software development process as I do out of composition, production and performance.

And what’s the history of VIZZable thus far?

When Max for Live dropped, the first thing I started experimenting with was video. Early experiments included simple MIDI triggering of clips and effects automation. Max for Live was pretty rough back then but the potential was exciting. I figured someone out there would release some amazing video Max for Live patch and I could just play with it, but the whole video thing didn’t take off as I’d expected. An update to Max 5 brought VIZZIE, Max’s easy-to-use video processing devices. VIZZIE’s great because it’s very high level and very fast to get something going, as opposed to Jitter, which can be fiddly and technical – VIZZIE is to Jitter as Duplo is to Lego. The first incarnation of VIZZable was basically just the VIZZIE devices with their controls ripped out and replaced with Ableton controls, plus a simple system of sends and receives to pass video from device to device... and it worked! I built a few other devices, like a MIDI-triggered video player, and put it out. In Amsterdam, at the exact same time, Fabrizio Poce was putting out a suite of video plugins he’d been working on called V-Module. Coincidentally, he’d adopted the same implementation of send and receive devices for passing video between devices, so with a little bit of work on my end, both of our suites could work together. We had a chat on the phone and started up a Google group for Max for Live visuals, which has about 300 members now.

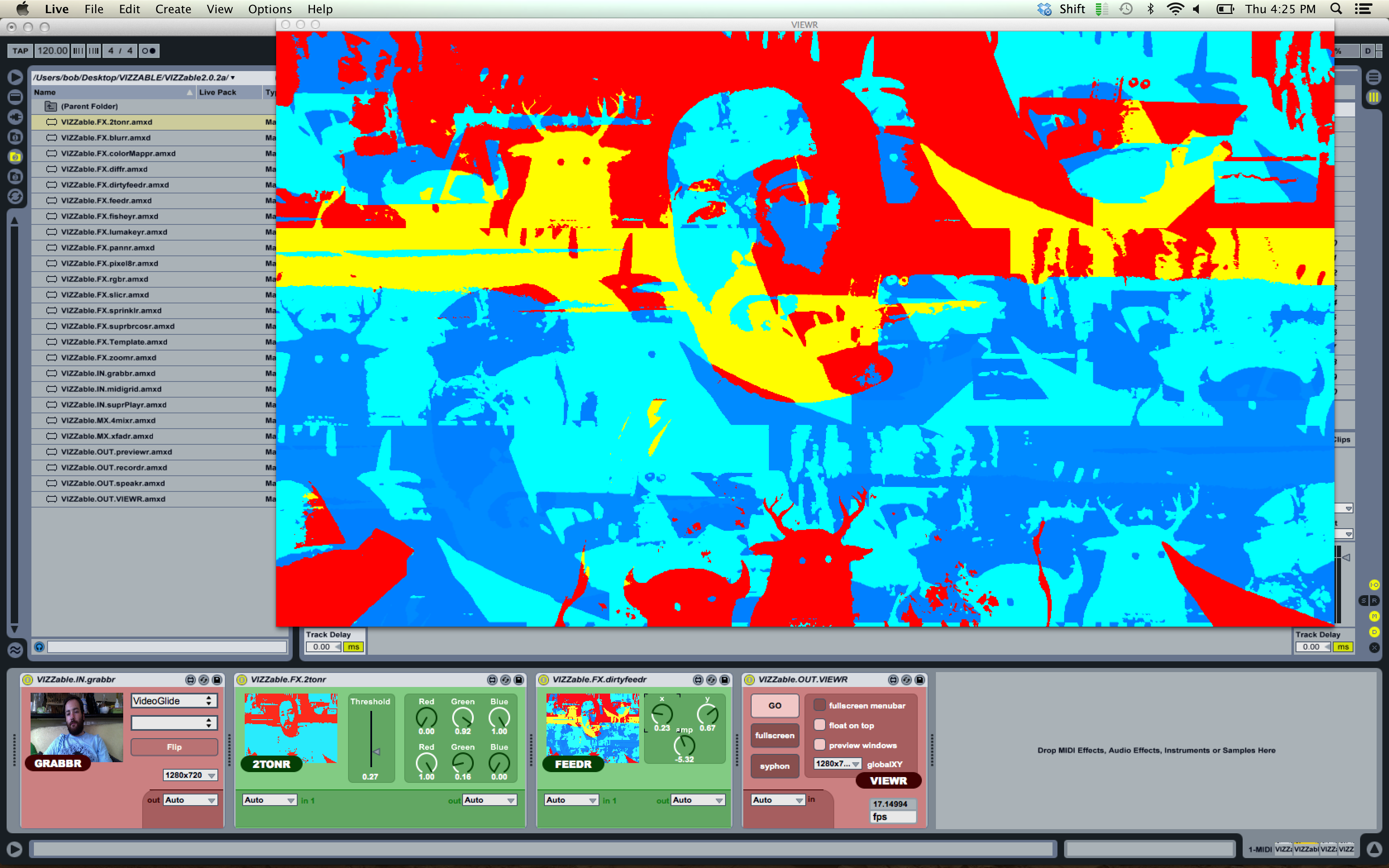

The goal for VIZZable now is to make high quality, easy to use tools for musicians wanting to build visuals into their live set as well as artists working primarily with video. Being highly integrated into Ableton opens up a lot of potential for automation and user interaction, so it’s well suited for interactive installations and other non-performance situations.

What is VIZZable 2 and where is it at, development-wise?

In version 2, VIZZable’s been completely rewritten so it no longer has any code from the original VIZZIE modules, but I’ve tried to maintain their Duplo-like nature. A lot of work has been done on the auto-connect system used to pass video between devices. Chris Gough from the Google group has developed a very slick system for this, which works a bit like Live’s native audio side-chaining. It presents a list of the video devices in the set and lets you pipe video around very easily and flexibly.

For the nerds, VIZZable now works exclusively with GL textures, which are a gazillion times more efficient than the matrices VIZZIE uses. It means that on modern hardware you can run a lot of video devices and keep a high frame rate. All the FX processing is done using Gen, which is a new feature in Max 6. It’s very fast and powerful, and there’s an FX template in the suite so that anyone wanting to get their hands dirty can build their own FX.

For me, the highlight of the new version is the clip player device that lets you drop videos onto clip slots and work with them the same way you work with audio. I think there’s a lot of potential for video mashup sort of work and it’s just a feature I’ve wanted to see in Live since I started working with it.

Do you know of any cases of interesting uses out in the wild? Anyone using it on stage or for commercial releases?

It’s hard to know exactly how far VIZZable’s reach is. We have a lot of active users on the Google group using it regularly for gigs, but that’s just a slice of all users. I’ve seen some interesting work by a cellist using motion controllers to process audio and video simultaneously. I’ve also seen people using it in theatre, and I see experiments pop up on YouTube and Vimeo. It’s great seeing the work people create with VIZZable, and I’m looking forward to seeing how people use the new features.

Tell us about your most recent project…

The current project in the pipeline is an improvised live audio-visual piece with a band I VJ with called Virtual Proximity. I’ve been writing some Max for Live plugins to enable the musicians to control the Melbourne Town Hall Grand Organ, allowing them to open and close stops from within Ableton as well as automating the swell pedals etc. MIDI data’s being passed from the musicians over to me via OSC (and some more M4L plugins), where it’s being sent into VIZZable for generating responsive visuals. We’re also using David Butler’s DMaX suite for controlling lights in response to drums. Audio, video and lighting are all being controlled by Ableton and M4L, as is the four-storey pipe organ. With Max integrated, Ableton becomes more of a universal sequencing and performance tool with which you can control anything (maybe next will be robots).

The previous project was a collaboration with Melbourne singer and composer Gian Slater and her choir Invenio. We pulled the audio from each singer’s microphone into Ableton, where a Max for Live plug-in analysed the amplitude and sent that out over OSC to a desktop computer running Derivative TouchDesigner. The visuals were generated in TouchDesigner and projected back onto the singers so that when a singer sang, the visuals projected onto them would react. Gian arranged the piece to create patterns of light on the singers. The project was a test run to assess the feasibility of the concept, and it was very successful, so we’re aiming to scale the show up to incorporate more singers.
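
For readers curious what "analyse the amplitude and send it out over OSC" might look like outside of Max, here is a rough Python sketch. It is not Bob's patch: the RMS measure, the function names and the `/singer/1/amp` address are all assumptions made for illustration. It computes the level of a block of audio samples and packs it into a single-float OSC message using only the standard library.

```python
import math
import struct


def rms_amplitude(samples):
    """Root-mean-square level of a block of samples (assumed to be floats in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def osc_pad(raw):
    """Null-terminate a byte string and pad it to a multiple of 4 bytes, as OSC requires."""
    padded_len = (len(raw) // 4 + 1) * 4
    return raw.ljust(padded_len, b"\x00")


def osc_float_message(address, value):
    """Build an OSC message carrying one big-endian float32 argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. "/singer/1/amp"
        + osc_pad(b",f")                  # type tag string: one float argument
        + struct.pack(">f", value)        # the argument itself
    )


# Hypothetical address for the first singer's microphone level.
level = rms_amplitude([0.5, -0.5, 0.5, -0.5])
message = osc_float_message("/singer/1/amp", level)
```

The resulting bytes could then be sent as a UDP datagram to whatever host and port the visuals machine is listening on; those details would depend entirely on the setup.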

Download VIZZable and find out more about Bob’s projects here. Check out this introductory video on the VIZZable software.

Head on over to check out part one of our new Best of Max series here, in which we’ll be showing you the best Max for Live tools we’ve found and how to put them into use in your music.